Alice Xiang

Profile

Alice Xiang is the Global Head of AI Ethics at Sony. As the VP leading AI ethics initiatives across Sony Group, she manages the team responsible for conducting AI ethics assessments across Sony's business units and implementing Sony's AI Ethics Guidelines. In addition, as the Lead Research Scientist for AI ethics at Sony AI, Alice leads a lab of AI researchers working on cutting-edge research to enable the development of more responsible AI solutions. Alice also recently served as a General Chair for the ACM Conference on Fairness, Accountability, and Transparency (FAccT), the premier multidisciplinary research conference on these topics.

Alice previously served on the leadership team of the Partnership on AI. As the Head of Fairness, Transparency, and Accountability Research, she led a team of interdisciplinary researchers and a portfolio of multi-stakeholder research initiatives. She also served as a Visiting Scholar at Tsinghua University’s Yau Mathematical Sciences Center, where she taught a course on Algorithmic Fairness, Causal Inference, and the Law.

She has been quoted in the Wall Street Journal, MIT Tech Review, Fortune, Yahoo Finance, and VentureBeat, among others. She has given guest lectures at the Simons Institute at Berkeley, USC, Harvard, and SNU Law School, among other institutions. Her research has been published in top machine learning conferences, journals, and law reviews.

Alice is both a lawyer and statistician, with experience developing machine learning models and serving as legal counsel for technology companies. Alice holds a Juris Doctor from Yale Law School, a Master’s in Development Economics from Oxford, a Master’s in Statistics from Harvard, and a Bachelor’s in Economics from Harvard.

Message

“Our AI Ethics research team conducts cutting-edge research on fairness, transparency, and accountability in AI. Our projects aim to enable the development of more ethical AI within Sony and also to contribute to the global research discourse around AI ethics. Our goal is to make Sony a global leader in AI ethics.”

Publications

Despite extensive efforts to create fairer machine learning (ML) datasets, there remains a limited understanding of the practical aspects of dataset curation. Drawing from interviews with 30 ML dataset curators, we present a comprehensive taxonomy of the challenges and trade…

We tackle societal bias in image-text datasets by removing spurious correlations between protected groups and image attributes. Traditional methods only target labeled attributes, ignoring biases from unlabeled ones. Using text-guided inpainting models, our approach ensures …

Deep neural networks trained via empirical risk minimisation often exhibit significant performance disparities across groups, particularly when group and task labels are spuriously correlated (e.g., “grassy background” and “cows”). Existing bias mitigation methods that aim t…

Machine learning (ML) datasets, often perceived as neutral, inherently encapsulate abstract and disputed social constructs. Dataset curators frequently employ value-laden terms such as diversity, bias, and quality to characterize datasets. Despite their prevalence, these ter…

The rapid and wide-scale adoption of AI to generate human speech poses a range of significant ethical and safety risks to society that need to be addressed. For example, a growing number of speech generation incidents are associated with swatting attacks in the United States…

Human-centric computer vision (HCCV) data curation practices often neglect privacy and bias concerns, leading to dataset retractions and unfair models. HCCV datasets constructed through nonconsensual web scraping lack crucial metadata for comprehensive fairness and robustnes…

This paper strives to measure apparent skin color in computer vision, beyond a unidimensional scale on skin tone. In their seminal paper Gender Shades, Buolamwini and Gebru have shown how gender classification systems can be biased against women with darker skin tones. While…

Biases in large-scale image datasets are known to influence the performance of computer vision models as a function of geographic context. To investigate the limitations of standard Internet data collection methods in low- and middle-income countries, we analyze human-centri…

Human-centric image datasets are critical to the development of computer vision technologies. However, recent investigations have foregrounded significant ethical issues related to privacy and bias, which have resulted in the complete retraction, or modification, of several …

Few datasets contain self-identified sensitive attributes, inferring attributes risks introducing additional biases, and collecting attributes can carry legal risks. Besides, categorical labels can fail to reflect the continuous nature of human phenotypic diversity, making i…

The rise of facial recognition and related computer vision technologies has been met with growing anxiety over the potential for artificial intelligence (“AI”) to create mass surveillance systems and further entrench societal biases. These concerns have led to calls for grea…

Speech AI technologies are largely trained on publicly available datasets or by the massive web-crawling of speech. In both cases, data acquisition focuses on minimizing collection effort, without necessarily taking the data subjects’ protection or user needs into considerat…

Recent interest in causality for fair decision-making systems has been accompanied by great skepticism due to practical and epistemological challenges with applying existing causal fairness approaches. Existing works mainly seek to remove the causal effect of social categ…

As computer vision systems become more widely deployed, there is increasing concern from both the research community and the public that these systems are not only reproducing but amplifying harmful social biases. The phenomenon of bias amplification, which is the focus of t…

We propose to implicitly learn a set of continuous face-varying dimensions, without ever asking an annotator to explicitly categorize a person. We uncover the dimensions by learning on a novel dataset of 638,180 human judgments of face similarity (FAX). We demonstrate the ut…

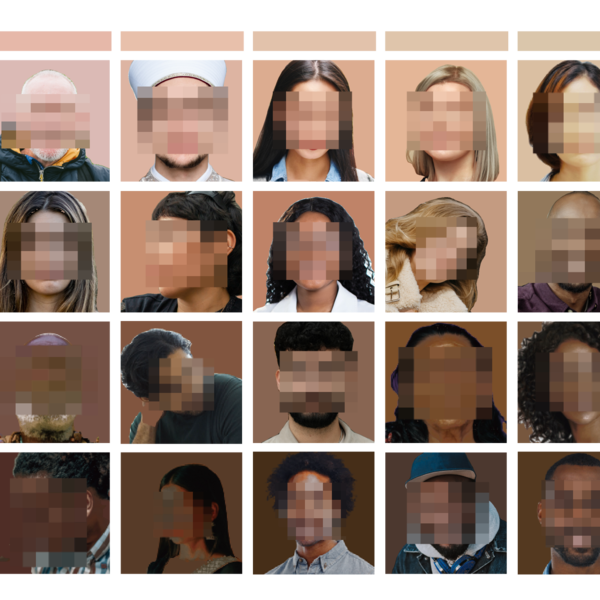

Biases in human-centric computer vision models are often attributed to a lack of sufficient data diversity, with many demographics insufficiently represented. However, auditing datasets for diversity can be difficult, due to an absence of ground-truth labels of relevant feat…

The effects of AI systems are far-reaching and affect diverse communities all over the world. The demographics of AI teams, however, do not reflect this diversity. Instead, these teams, particularly at big tech companies, are dominated by Western, White, and male workers…

In recent years, there has been a proliferation of papers in the algorithmic fairness literature proposing various technical definitions of algorithmic bias and methods to mitigate bias. Whether these algorithmic bias mitigation methods would be permissible from a legal pers…

The risk of re-offense is considered in decision-making at many stages of the criminal justice system, from pre-trial, to sentencing, to parole. To aid decision makers in their assessments, institutions increasingly rely on algorithmic risk assessment instruments (RAIs). The…

As calls for fair and unbiased algorithmic systems increase, so too does the number of individuals working on algorithmic fairness in industry. However, these practitioners often do not have access to the demographic data they feel they need to detect bias in practice. Even …

Blog

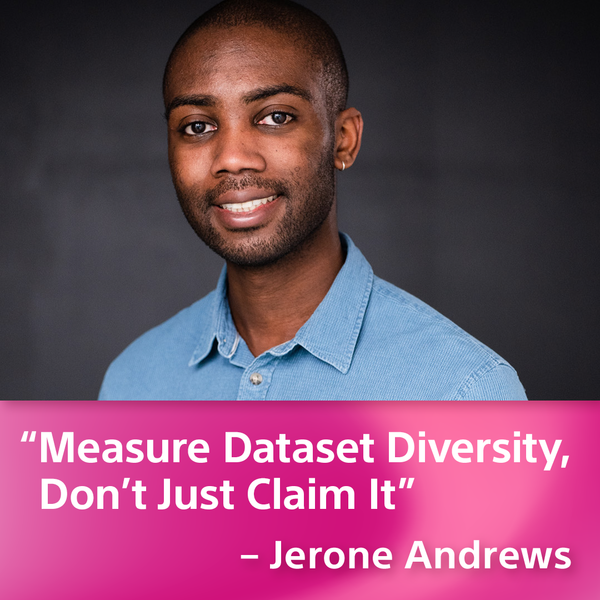

July 27, 2024 | AI Ethics

Ushering in Needed Change in the Pursuit of More Diverse Datasets

Sony AI Research Scientist Jerone Andrews’ paper, "Measure Dataset Diversity, Don't Just Claim It", has won a Best Paper Award at ICML 2024. This recognition is a testament to the groundbreaking work being done to improve the re…

March 29, 2024 | Life at Sony AI

Celebrating the Women of Sony AI: Sharing Insights, Inspiration, and Advice

In March, the world commemorates the accomplishments of women throughout history and celebrates those of today. The United States observes March as Women’s History Month, while many countries around the globe observe International…

January 18, 2024 | Events

Navigating Responsible Data Curation Takes the Spotlight at NeurIPS 2023

The field of Human-Centric Computer Vision (HCCV) is rapidly progressing, and some researchers are raising a red flag on the current ethics of data curation. A primary concern is that today’s practices in HCCV data curation – whic…

December 13, 2023 | Events

Sony AI Reveals New Research Contributions at NeurIPS 2023

Sony Group Corporation and Sony AI have been active participants in the annual NeurIPS Conference for years, contributing pivotal research that has helped to propel the fields of artificial intelligence and machine learning forwar…

September 21, 2023 | AI Ethics

Beyond Skin Tone: A Multidimensional Measure of Apparent Skin Color

Advancing Fairness in Computer Vision: A Multi-Dimensional Approach to Skin Color Analysis. In the ever-evolving landscape of artificial intelligence (AI) and computer vision, fairness is a principle that has gained substantial …

June 29, 2023 | AI Ethics

New Dataset Labeling Breakthrough Strips Social Constructs in Image Recognition

The outputs of AI as we know them today are created through deeply collaborative processes between humans and machines. The reality is that you cannot …

April 17, 2023 | AI Ethics

Exposing Limitations in Fairness Evaluations: Human Pose Estimation

As AI technology becomes increasingly ubiquitous, we reveal new ways in which AI model biases may harm individuals. In 2018, for example, researchers Joy Buolamwini and Timnit Gebru revealed how commercial services classify human …

March 16, 2023 | AI Ethics

Being 'Seen' vs. 'Mis-Seen': Tensions Between Privacy and Fairness in Computer Vision

Privacy is a fundamental human right that ensures individuals can keep their personal information and activities private. In the context of computer vision, privacy concerns arise when cameras and other sensors collect personal in…

May 12, 2021 | AI Ethics

Launching our AI Ethics Research Flagship

I recently joined Sony AI from the Partnership on AI, where I served on the Leadership Team and led a team of researchers focused on fairness, transparency, and accountability in AI. In that role, I had a unique vantage point in…

JOIN US

Shape the Future of AI with Sony AI

We want to hear from those of you who have a strong desire to shape the future of AI.