Sony AI at ICLR 2025: Refining Diffusion Models, Reinforcement Learning, and AI Personalization

Events

Sony AI

April 23, 2025

This April, Sony AI will present a wide-ranging portfolio of research at the International Conference on Learning Representations (ICLR 2025). From advancing text-to-sound and video models to developing more sample-efficient reinforcement learning agents, our teams are focused on solving problems that stand in the way of creative development and utility.

This year’s accepted papers span some of our core Flagship Projects — AI for Creators, Gaming and Interactive Agents, and AI Ethics — and reflect our continued commitment to pushing the boundaries of AI while ensuring it remains practical, performant, and accessible.

Here’s a closer look at the research we’ll be sharing at the conference.

AI for Creators

SoundCTM: Unifying Score-Based and Consistency Models for Full-Band Text-to-Sound Generation

Authors: Koichi Saito, Dongjun Kim (from Stanford), Takashi Shibuya, Chieh-Hsin Lai, Zhi Zhong, Yuhta Takida, Yuki Mitsufuji

Summary: SoundCTM (Sound Consistency Trajectory Model) combines score-based diffusion and consistency models into a single architecture that supports both fast one-step sampling and high-fidelity multi-step generation for audio.

Why this research matters: Creators working in sound design or game development often have to trade speed for quality. SoundCTM helps bridge that gap, enabling a musician to prototype ambient background loops in seconds, then refine them into final-quality output without retraining or switching tools. SoundCTM also builds on Sony AI’s prior research in Consistency Trajectory Models (CTM), introduced at ICLR 2024. While CTM introduced a more flexible way to sample from diffusion models by learning how data evolves over time, SoundCTM extends this advancement to full-band audio, carrying over CTM’s flexible trade-off between speed and quality.

“We extend the CTM framework to audio and propose a new feature distance that uses the teacher network for distillation — no external pretrained features are needed.”

– Authors: Koichi Saito, Dongjun Kim, Takashi Shibuya, Chieh-Hsin Lai, Zhi Zhong, Yuhta Takida, and Yuki Mitsufuji

The result is a single model that supports both 1-step generation for quick sound prototyping and multi-step deterministic sampling for production-quality audio—a crucial need for sound designers and game developers.

Link to paper: SoundCTM: Uniting Score-based and Consistency Models for Text-to-Sound Generation – Sony AI
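The "one model, two sampling modes" idea can be illustrated with a toy sketch. It assumes a trajectory model exposes a function that jumps a sample from time `t` directly to an earlier time `s`; the names `sample_along_trajectory` and `toy_jump` are hypothetical, and the toy jump below is an exact closed-form map rather than a trained network, so this is not SoundCTM's implementation.

```python
import math
from typing import Callable, Sequence

# Hypothetical signature: jump(x, t, s) moves a sample from time t to time s.
Jump = Callable[[float, float, float], float]


def sample_along_trajectory(jump: Jump, x_start: float, times: Sequence[float]) -> float:
    """Chain deterministic jumps through a decreasing grid of time points."""
    x = x_start
    for t, s in zip(times[:-1], times[1:]):
        x = jump(x, t, s)
    return x


def toy_jump(x: float, t: float, s: float) -> float:
    """Stand-in for a learned model: exact solution map of dx/dt = x."""
    return x * math.exp(s - t)


# One-step mode: a single jump from the final time straight to time 0.
one_step = sample_along_trajectory(toy_jump, 2.0, [1.0, 0.0])

# Multi-step mode: the same function, called over a finer time grid.
multi_step = sample_along_trajectory(toy_jump, 2.0, [1.0, 0.5, 0.25, 0.0])
```

Because the toy jump is exact, both modes return the same value here. With a learned model each jump is approximate, which is why spending more steps can improve quality.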

MMDisCo: Multi-Modal Discriminator-Guided Cooperative Diffusion for Joint Audio and Video Generation

Authors: Akio Hayakawa, Masato Ishii, Takashi Shibuya, Yuki Mitsufuji

Summary: MMDisCo uses a small coordinating network to connect two separate models, one for audio and one for video, so that they generate outputs that match each other in timing and meaning. Instead of retraining everything from scratch, it adds a lightweight system that helps both models stay in sync.

Why this research matters: Multimodal storytelling is foundational to modern media. MMDisCo allows a content creator to combine independently trained sound and video models to generate synchronized voiceovers and visuals — all without retraining large models or compromising quality.

Link to paper: MMDisCo: Multi-Modal Discriminator-Guided Cooperative Diffusion for Joint Audio and Video Generation

Jump Your Steps: Optimizing Sampling Schedule of Discrete Diffusion Models

Authors: Yong-Hyun Park, Chieh-Hsin Lai, Satoshi Hayakawa, Yuhta Takida, Yuki Mitsufuji

Summary: Jump Your Steps is a principled way to reallocate sampling steps in Discrete Diffusion Models—achieving the same quality with fewer steps!

Why this research matters: For applications like generating catchy jingles, stylized captions, or motion graphics, this approach means faster generation without sacrificing consistency—a big win for interactive design tools and apps with real-time constraints.

Link to paper: Jump Your Steps: Optimizing Sampling Schedule of Discrete Diffusion Models – Sony AI

Mining Your Own Secrets: Diffusion Classifier Scores for Continual Personalization of Text-to-Image Diffusion Models

Authors: Saurav Jha, Shiqi Yang, Masato Ishii, Mengjie Zhao, Christian Simon, M. Jehanzeb Mirza, Dong Gong, Lina Yao, Shusuke Takahashi, Yuki Mitsufuji

Summary: A low-memory, efficient method for continually updating a text-to-image diffusion model using diffusion classifier scores.

Why this research matters: For designers or small teams building personal brand assets, this method enables their AI image generator to "learn" evolving brand language over time (think adapting to seasonal palettes or emerging visual trends) without forgetting past concepts or requiring expensive finetuning. It promises to be a significant timesaver overall.

Link to paper: Mining your own secrets: Diffusion Classifier Scores for Continual Personalization of Text-to-Image Diffusion Models – Sony AI

HERO: Human-Feedback Efficient Reinforcement Learning for Online Diffusion Model Finetuning

Authors: Ayano Hiranaka*, Shang-Fu Chen*, Chieh-Hsin Lai*, Dongjun Kim, Naoki Murata, Takashi Shibuya, Wei-Hsiang Liao, Shao-Hua Sun**, Yuki Mitsufuji**

Summary: HERO efficiently fine-tunes text-to-image diffusion models with minimal online human feedback (<1K) for various tasks.

Why this research matters: Let’s imagine someone wants their chatbot or virtual companion to match a specific tone or style... HERO allows that fine-tuning to happen with minimal interaction, reducing friction and making alignment far more accessible at scale.

Link to paper: Human-Feedback Efficient Reinforcement Learning for Online Diffusion Model Finetuning – Sony AI

Weighted Point Set Embedding for Multimodal Contrastive Learning Toward Optimal Similarity Metric

Authors: Toshimitsu Uesaka, Taiji Suzuki, Yuhta Takida, Chieh-Hsin Lai, Naoki Murata, Yuki Mitsufuji

Summary: This research proposes a powerful alternative to one-point representations in contrastive learning by using weighted point sets, capturing richer and more nuanced similarities between instances. Our method not only aligns with the optimal similarity measure—pointwise mutual information—but also shows strong theoretical guarantees and delivers consistent gains in real-world vision-language tasks.

Why this research matters: In systems that must compare across modalities — such as a digital assistant retrieving visuals from a voice query — this method improves matching accuracy and interpretability, making interactions more natural and inclusive.

Link to paper: Weighted Point Cloud Embedding for Multimodal Contrastive Learning Toward Optimal Similarity Metric
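To make the contrast with one-point representations concrete, here is a minimal sketch. It assumes each instance is embedded as a small set of points with nonnegative weights, and that two instances are compared by a weighted sum of Gaussian kernel values over all point pairs. The function names, the Gaussian kernel, and the `bandwidth` parameter are illustrative assumptions, not the paper's exact formulation.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical representation: a list of (weight, point) pairs.
WeightedPointSet = List[Tuple[float, Sequence[float]]]


def gaussian_kernel(x: Sequence[float], y: Sequence[float], bandwidth: float = 1.0) -> float:
    """Gaussian (RBF) kernel between two points of equal dimension."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / (2.0 * bandwidth ** 2))


def point_set_similarity(a: WeightedPointSet, b: WeightedPointSet, bandwidth: float = 1.0) -> float:
    """Weighted sum of kernel values over every pair of points from a and b."""
    return sum(
        weight_a * weight_b * gaussian_kernel(point_a, point_b, bandwidth)
        for weight_a, point_a in a
        for weight_b, point_b in b
    )


# An "image" spread over two points and a "caption" concentrated on one point.
image = [(0.5, (0.0, 0.0)), (0.5, (1.0, 0.0))]
caption = [(1.0, (0.0, 0.0))]
score = point_set_similarity(image, caption)
```

With a single point of weight 1 on each side, this reduces to an ordinary kernel similarity between two embeddings, which is the one-point special case.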

Gaming and Interactive Agents

SimBa: Simplicity Bias for Scaling Up Parameters in Deep Reinforcement Learning

Authors: Hojoon Lee, Dongyoon Hwang, Donghu Kim, Hyunseung Kim, Jun Jet Tai, Kaushik Subramanian, Peter R. Wurman, Jaegul Choo, Peter Stone, Takuma Seno

Summary: SimBa leverages simplicity bias in network design to scale reinforcement learning models while maintaining stable learning dynamics.

Why this research matters: For game developers training AI opponents or racing agents, SimBa offers a path to scale up policies, enabling smarter in-game behavior, without destabilizing training or requiring massive tuning overhead.

Link to paper: SimBa: Simplicity Bias for Scaling Up Parameters in Deep Reinforcement Learning

Residual-MPPI: Online Policy Customization for Continuous Control

Authors: Pengcheng Wang, Chenran Li, Catherine Weaver, Kenta Kawamoto, Masayoshi Tomizuka, Chen Tang, Wei Zhan

Summary: Residual-MPPI combines residual learning and model predictive path integral (MPPI) control for real-time, zero-shot control customization. Demo videos and code are available on the project website: https://sites.google.com/view/residual-mppi

Why this research matters: Imagine a racing game where the AI adapts to your driving style in real-time. Just a dream, right? Residual-MPPI makes that possible by adjusting the underlying control policy on the fly, without needing full retraining.

Link to paper: Residual-MPPI: Online Policy Customization for Continuous Control – Sony AI
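For readers unfamiliar with model predictive path integral control, the sketch below shows one generic MPPI update on a toy problem: perturb a nominal control sequence, score each perturbed sequence, and average the perturbations with weights that favor low cost. It is a textbook-style illustration only. How Residual-MPPI builds on a prior policy is described in the paper, and the function name and default parameters here are assumptions.

```python
import math
import random
from typing import Callable, List, Optional


def mppi_update(
    nominal: List[float],
    rollout_cost: Callable[[List[float]], float],
    num_samples: int = 256,
    noise_std: float = 0.5,
    temperature: float = 1.0,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Return an improved control sequence from one generic MPPI step."""
    rng = rng or random.Random(0)
    # Sample Gaussian perturbations around the nominal control sequence.
    perturbations = [
        [rng.gauss(0.0, noise_std) for _ in nominal] for _ in range(num_samples)
    ]
    # Score every perturbed sequence with the user-supplied cost.
    costs = [
        rollout_cost([u + e for u, e in zip(nominal, eps)]) for eps in perturbations
    ]
    # Subtract the minimum cost before exponentiating for numerical stability.
    min_cost = min(costs)
    weights = [math.exp(-(c - min_cost) / temperature) for c in costs]
    total = sum(weights)
    # Shift each control by the cost-weighted average of its perturbations.
    return [
        u + sum(w * eps[t] for w, eps in zip(weights, perturbations)) / total
        for t, u in enumerate(nominal)
    ]
```

In a customization setting, `nominal` could stand in for actions proposed by an existing policy and `rollout_cost` for a newly added objective. No retraining occurs in this step, because the update only reweights sampled action sequences.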

AI Ethics

GenDataAgent: On-the-fly Dataset Augmentation with Synthetic Data

Authors: Zhiteng Li, Lele Chen, Jerone T. A. Andrews, Yunhao Ba, Yulun Zhang, Alice Xiang

Summary: AI systems often require huge labeled datasets to learn effectively, but gathering and labeling real-world data is slow, expensive, and sometimes impractical. Synthetic data—especially from powerful tools like diffusion models—offers an exciting shortcut, but until now, most systems generated synthetic data without knowing whether it was actually helping…or not.

GenDataAgent changes that. It actively identifies which real examples the model struggles with, then generates targeted synthetic images that fill those gaps. It uses feedback during training, adjusts captions for more variety using LLaMA-2, and filters out unhelpful or noisy samples. Our results show that models trained with GenDataAgent learn faster, generalize better, and treat different data types more fairly.

Why this research matters: Consider a scenario where an AI model is trained to classify rare bird species or foods from different cultures. GenDataAgent can identify the types of images the model struggles with—those that fall near the edge of decision boundaries—and generate new examples that fill in those gaps. This not only improves overall accuracy but also reduces bias, helping the model perform more fairly across all categories. While not tested on medical or biometric data, the same principles could apply to areas where labeled data is scarce or skewed.

Link to paper: GenDataAgent: On-the-fly Dataset Augmentation with Synthetic Data
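As a rough illustration of finding examples "near the edge of decision boundaries", the sketch below ranks samples by the gap between a classifier's two most probable classes and returns the least confident ones. The margin criterion and the function names are assumptions made for illustration. This post does not specify how GenDataAgent measures difficulty.

```python
from typing import List, Sequence


def prediction_margin(probs: Sequence[float]) -> float:
    """Gap between the two highest class probabilities (needs at least 2 classes)."""
    top_two = sorted(probs, reverse=True)[:2]
    return top_two[0] - top_two[1]


def select_hard_examples(all_probs: Sequence[Sequence[float]], k: int) -> List[int]:
    """Return indices of the k samples with the smallest prediction margin."""
    ranked = sorted(range(len(all_probs)), key=lambda i: prediction_margin(all_probs[i]))
    return ranked[:k]


# Predicted class probabilities for three real training images.
predictions = [
    [0.90, 0.05, 0.05],  # confident prediction
    [0.40, 0.35, 0.25],  # close to a decision boundary
    [0.60, 0.30, 0.10],  # moderately uncertain
]
hard_indices = select_hard_examples(predictions, k=2)
```

The selected indices could then be used to choose which real images guide the generation of targeted synthetic samples.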

Workshop

Workshop: Navigating Data Challenges in Foundation Models

Full title: 2nd Workshop on Navigating and Addressing Data Problems for Foundation Models (DATA-FM)

April 27 · Hall 4 #4 · 6 p.m. PDT

What it’s about:

As foundation models (FMs) grow more powerful, the data that trains them is becoming just as important — and complicated. This workshop dives into the real-world challenges behind the scenes: things like dataset quality, attribution, copyright, and how to ensure fair, transparent, and effective model training at scale.

Why it matters:

Sony AI researcher Jerone Andrews joins a cross-disciplinary group of leaders tackling the biggest data questions in AI today. From synthetic data to community-driven curation, this session is about building smarter foundations for future models.

Workshop Details:

- 2nd Workshop on Navigating and Addressing Data Problems for Foundation Models (DATA-FM)

- DATA-FM Workshop @ ICLR 2025

Additional Work From Our Chief Scientist, Peter Stone:

Full Title: Longhorn: State Space Models are Amortized Online Learners

Authors: Bo Liu, Rui Wang, Lemeng Wu, Yihao Feng, Peter Stone, Qiang Liu

Full Title: Learning a Fast Mixing Exogenous Block MDP using a Single Trajectory

Authors: Alexander Levine, Peter Stone, Amy Zhang

Code is available at: https://github.com/midi-lab/steel

Full Title: Learning Memory Mechanisms for Decision Making through Demonstrations

Authors: William Yue, Bo Liu, Peter Stone

Code is available at: https://github.com/WilliamYue37/AttentionTuner

Looking Ahead

From advancing audio and video models to reinforcement learning and personalization, our accepted research at ICLR 2025 reflects our ongoing commitment to advancing the science of AI while building tools that can empower creators, developers, and users alike.

Whether it's enabling artists to craft full-band soundscapes with greater control, helping game developers create more responsive and adaptive agents, or making it easier for users to personalize generative models without risking their privacy, these innovations point to a future where AI is more capable, more accessible, and more aligned with human needs.

We’re proud to share this work with the global research community and look forward to the conversations and collaborations it will spark during ICLR 2025 and beyond.

Latest Blog

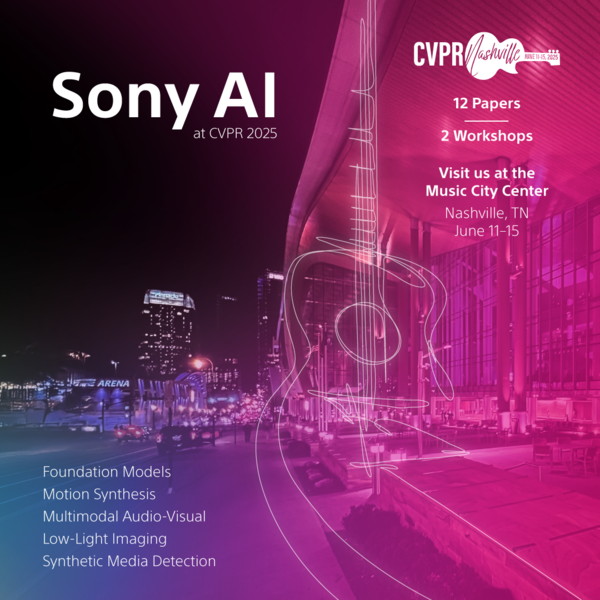

June 12, 2025 | Events, Sony AI

Research That Scales, Adapts, and Creates: Spotlighting Sony AI at CVPR 2025

At this year’s IEEE / CVF Computer Vision and Pattern Recognition Conference (CVPR) in Nashville, TN Sony AI is proud to present 12 accepted papers spanning the main conference and…

June 3, 2025 | Sony AI

Advancing AI: Highlights from May

From research milestones to conference prep, May was a steady month of progress across Sony AI. Our team's advanced work in vision-based reinforcement learning, continued building …

May 1, 2025 | Sony AI

Advancing AI: Highlights from April

April marked a standout moment for Sony AI as we brought bold ideas to the world stage at ICLR 2025. This year’s conference spotlighted our work across generative modeling, multimo…